Introduction

Last month, I followed the example of Full Stack Python and migrated this blog from Wordpress to Amazon Web Services (AWS) Simple Storage Service (S3). The S3 hosting approach gives me the following features:

- Global caching via Cloudfront's Content Delivery Network (CDN)

- Hypertext Transfer Protocol Secure (HTTPS) via AWS Certificate Manager

- Configuration management and a public record of website updates and edits via Git

- Quick and easy code highlighting and hyperlinks via Markdown

- Detailed logs and usage statistics via Cloudwatch

- Insanely cheap hosting costs

  - I accommodate about one hundred and forty (140) hits a day with CDN, DNS, hosting and logging for about three dollars ($3) a month

I configured Cloudfront to write its access logs to a separate, dedicated log bucket. Cloudfront writes the logs in a raw format, so I need a separate architecture to parse and analyze them.

Trade

In general, I need a service to ingest the logs, a service to parse and transform the logs (i.e., create actionable key/value pairs), a service to store the key/value pairs and, finally, a Graphical User Interface (GUI) to view the logs.
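To make the parse/transform step concrete, here is a minimal sketch, assuming the raw logs are Cloudfront standard access logs (tab-separated values, with the column names listed in a `#Fields:` header line) that have already been decompressed. The function name `parse_cf_log` is hypothetical. It reads the column names from the header instead of hardcoding them and prints each request as one line of key=value pairs, roughly the job a Logstash filter would do in the roll-your-own approach.

```bash
# Hypothetical sketch: turn Cloudfront access-log lines into key=value pairs.
# Column names come from the log's own "#Fields:" header line, so the
# column order is not hardcoded. Data values are tab-separated.
parse_cf_log() {
    awk -F'\t' '
        /^#Fields:/ {
            # Header looks like "#Fields: date time x-edge-location ..."
            sub(/^#Fields: */, "")
            n = split($0, names, " ")
            next
        }
        /^#/ { next }   # skip "#Version:" and any other comment lines
        {
            line = ""
            for (i = 1; i <= NF && i <= n; i++)
                line = line (i > 1 ? " " : "") names[i] "=" $i
            print line
        }
    ' "$@"
}
```

Because Cloudfront delivers its log files gzip-compressed, a typical invocation would pipe them in, e.g. `zcat logs/*.gz | parse_cf_log`, where `logs/` is a hypothetical local copy of the log bucket.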

Amazon does not provide a turnkey solution for this user story, so I faced two high-level approaches:

- Roll your own
  - Approach
    - Deploy and integrate separate services for ingest, transformation and analysis
  - Technology
    - The Elasticsearch, Logstash and Kibana (ELK) stack, which Elastic renamed to 'Elastic Stack'
  - Deployment
    - You can deploy the Elastic Stack either on a combination of Elastic Compute Cloud (EC2) and the Amazon-provided Elasticsearch Service, or on the Elastic-provided Elastic Cloud
  - Cost
    - The Amazon approach costs ~$15/month and the cheapest Elastic Cloud approach costs $45/month
  - Effort
    - The Amazon approach requires a significant amount of integration and troubleshooting, whereas the Elastic Cloud approach just requires the deployment of a few Logstash filters
- Turnkey
  - Approach
    - Use a push-button online service to ingest, parse and analyze the logs
  - Technology
    - Loggly, Sumo Logic and s3stat
  - Deployment
    - All services provide a simple 'push button' deployment (note: deployment may require minimal Identity and Access Management (IAM) configuration)
  - Cost
    - Sumo Logic, s3stat and Loggly all provide free options
    - Sumo Logic and Loggly limit retention to seven days for their free tier
    - s3stat offers a very creative pricing model for their free tier, which I discuss below
  - Effort
    - All three options require very little effort

NOTE: If you represent any of these companies and would like to update the bullets above, feel free to fork, edit and create a pull request for this blog post.

S3STAT

I decided to try s3stat because their cheap bastard plan amuses me. From their website:

> How It Works
>
> - Sign up for a Free Trial and try out the product (making sure you actually want to use it)
> - Blog about S3STAT, explaining to the world how awesome the product is, and how generous we are being to give it to a deadbeat like yourself for free.
> - Send us an email showing us where to find that blog post.
> - Get Hooked Up with a free, unlimited license for S3STAT.

Test Drive

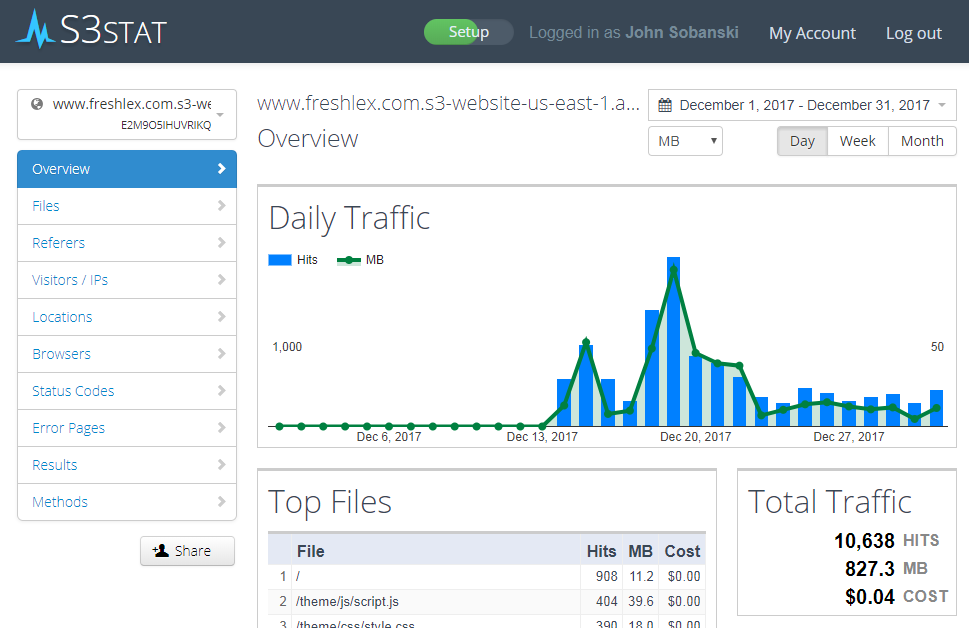

It took about thirty seconds to connect s3stat to my Cloudfront S3 logs bucket. s3stat provides both a wizard and a web app to help you get started. Once I logged in, I saw widgets for Daily Traffic, Top Files, Total Traffic, Daily Average and Daily Unique. s3stat also reports the resulting charges to your AWS account.

Troubleshooting

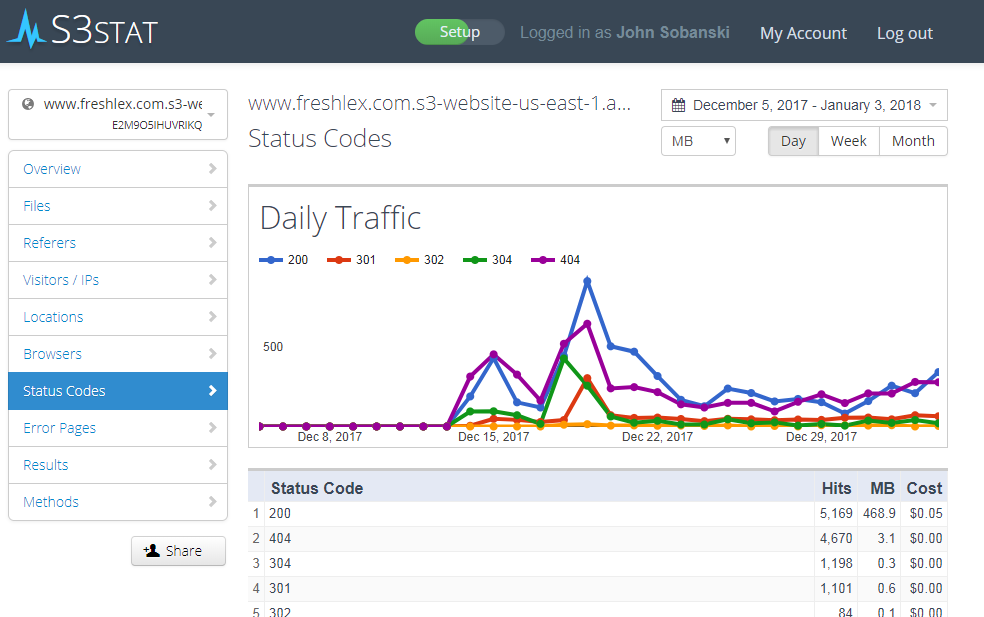

I clicked the other menu items and noticed that my new S3-hosted website returned a lot of error codes.

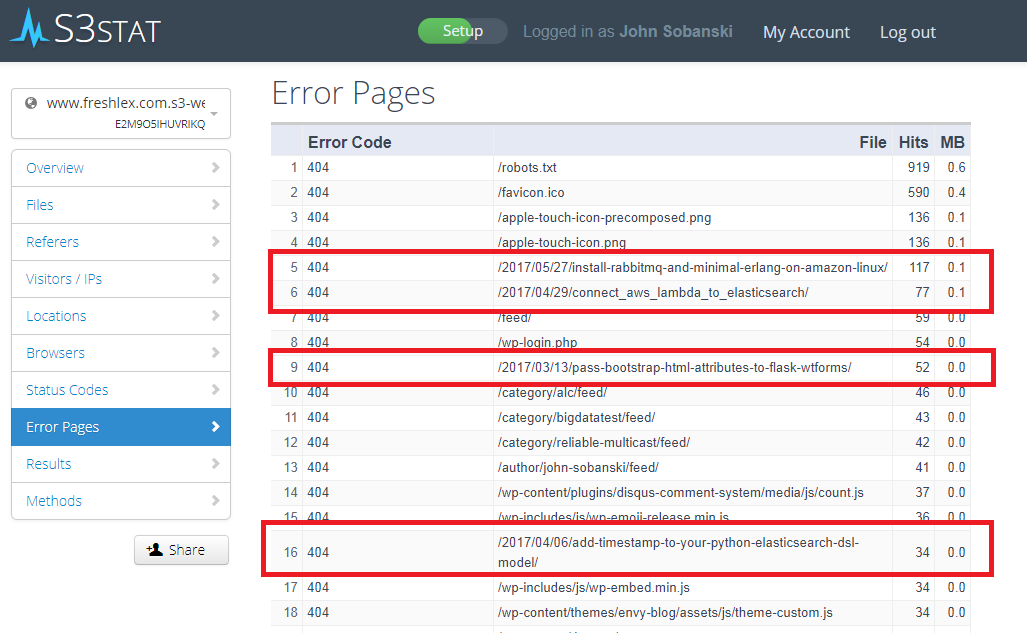

I noticed that people still followed links to my old web pages. I could tell because, when I migrated from Wordpress to S3, I took the dates out of the URLs. If a user bookmarked a Wordpress-style link (which includes the date), they would receive a 404 when they attempted to retrieve it. I highlighted the stale URLs in red below.
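s3stat surfaced these errors without any code. For comparison, a rough command-line equivalent is a minimal sketch like this one, assuming decompressed Cloudfront standard access logs whose `#Fields:` header names the `cs-uri-stem` and `sc-status` columns; the function name `top_404s` is hypothetical. It counts the requests that returned 404 per URI, most frequent first, which is enough to spot stale date-style links.

```bash
# Hypothetical sketch: count 404 responses per URI, most frequent first,
# from decompressed Cloudfront access logs (read from files or stdin).
# Column positions are looked up from the "#Fields:" header, not hardcoded.
top_404s() {
    awk -F'\t' '
        /^#Fields:/ {
            sub(/^#Fields: */, "")
            n = split($0, names, " ")
            for (i = 1; i <= n; i++) {
                if (names[i] == "cs-uri-stem") uri = i
                if (names[i] == "sc-status")   status = i
            }
            next
        }
        /^#/ { next }   # skip other comment lines
        uri && status && $status == "404" { count[$uri]++ }
        END { for (u in count) print count[u], u }
    ' "$@" | sort -rn
}
```

For example, `zcat logs/*.gz | top_404s | head` would list the ten most-requested missing paths, with `logs/` again standing in for a local copy of the log bucket.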

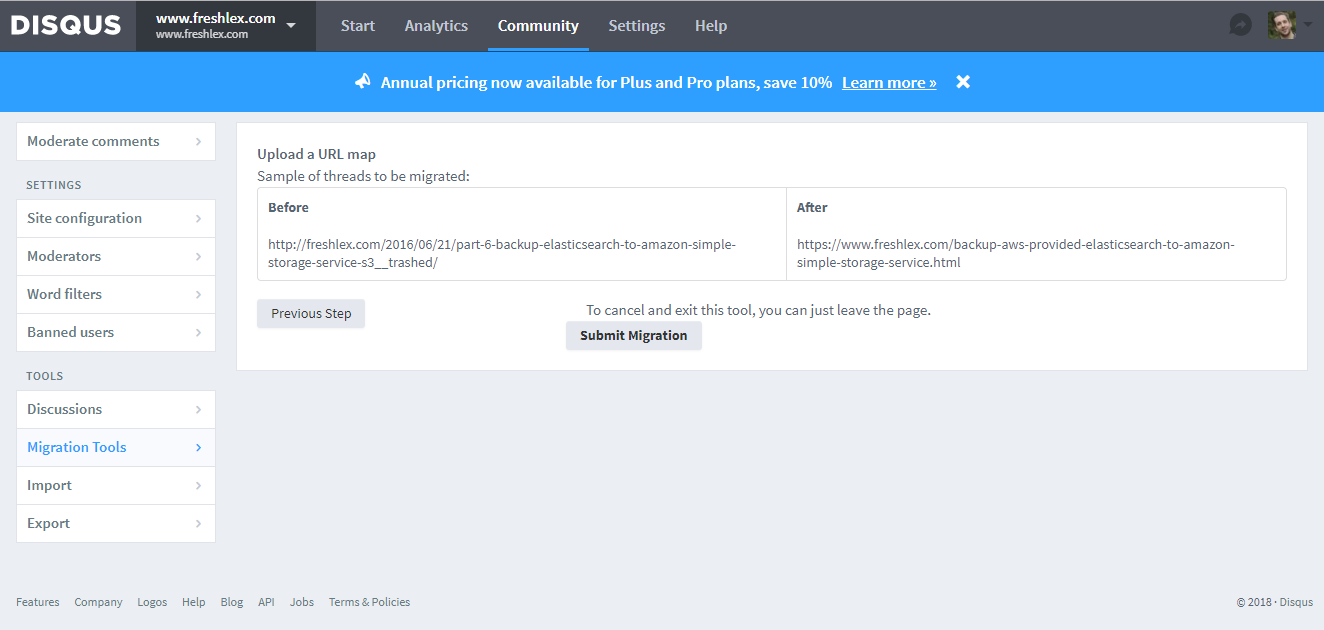

When I moved from Wordpress to S3, I submitted a URL map to Disqus' migration tool so that my comments would follow my site's new URL scheme.

I present a snippet of my URL map below. This map removes the date from the URL and sets the protocol to HTTPS.

```text
http://freshlex.com/2017/03/13/pass-bootstrap-html-attributes-to-flask-wtforms/, https://www.freshlex.com/pass-bootstrap-html-attributes-to-flask-wtforms.html
http://freshlex.com/2017/04/06/add-timestamp-to-your-python-elasticsearch-dsl-model/, https://www.freshlex.com/add-timestamp-to-your-python-elasticsearch-dsl-model.html
http://freshlex.com/2017/04/29/connect_aws_lambda_to_elasticsearch/, https://www.freshlex.com/connect_aws_lambda_to_elasticsearch.html
http://freshlex.com/2017/05/27/install-rabbitmq-and-minimal-erlang-on-amazon-linux/, https://www.freshlex.com/install-rabbitmq-and-minimal-erlang-on-amazon-linux.html
```
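Because every row follows the same pattern, the map can be generated rather than typed. A minimal sketch, assuming every old URL has the form `http://freshlex.com/YYYY/MM/DD/slug/` and every new page lives at `https://www.freshlex.com/slug.html` (true for the rows above); the helper names `new_url_for` and `make_url_map` are hypothetical.

```bash
# Hypothetical helper: derive the new-style URL from an old Wordpress-style URL:
#   http://freshlex.com/YYYY/MM/DD/slug/  ->  https://www.freshlex.com/slug.html
new_url_for() {
    local old="${1%/}"          # drop the trailing slash
    local slug="${old##*/}"     # keep everything after the last slash
    printf 'https://www.freshlex.com/%s.html\n' "$slug"
}

# Emit one "OLD, NEW" row per old URL read from stdin, skipping blank lines
make_url_map() {
    local old
    while read -r old; do
        if [ -n "$old" ]; then
            printf '%s, %s\n' "$old" "$(new_url_for "$old")"
        fi
    done
}
```

Feeding it a list of old URLs, e.g. `make_url_map < old_urls.txt > url_map.csv` (with `old_urls.txt` a hypothetical input file), produces rows in the same "OLD, NEW" shape as the map above.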

I fix the dead links by uploading a copy of each current page to the S3 location that matches its old Wordpress-style URL. I wrote a script that does this: it reads my URL map and creates the directory structure that the old Wordpress-style links expect, with an index.html in each directory that points to the new page, so the links keep working for anyone who bookmarked them.

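```bash
#!/bin/bash
# For each "OLD, NEW" row in url_map.csv, create the old Wordpress-style
# directory (YYYY/MM/DD/slug/) with an index.html symlink to the new page,
# so bookmarked links keep working once the tree is uploaded to S3.
while read -r OLD NEW; do
    # Fields 4-7 of the old URL are YYYY/MM/DD/slug
    # (the trailing comma after the last slash lands in field 8 and is dropped)
    DIR=$(echo "$OLD" | cut -f4-7 -d'/')
    # Field 4 of the new URL is the page file name, e.g. slug.html
    FILE=$(echo "$NEW" | cut -f4 -d'/')
    mkdir -p "$DIR"
    # Link four levels back up to the site root; the subshell keeps the
    # loop in the original directory even if cd fails
    (cd "$DIR" && ln -sf "../../../../$FILE" index.html)
done < url_map.csv
```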
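Before uploading, it can be worth confirming that none of the new symlinks dangle (for example, because of a typo in `url_map.csv`). A minimal sketch, assuming it runs in the same directory as the script above; `check_old_links` is a hypothetical name. It relies on `[ -e ]` following symlinks, so a link whose target is missing counts as broken.

```bash
# Hypothetical sanity check: report every old Wordpress-style path whose
# index.html does not resolve to a real file. Returns 1 if any are broken.
check_old_links() {
    local map="${1:-url_map.csv}" bad=0 OLD DIR
    while read -r OLD _; do
        # Same field extraction as the script: YYYY/MM/DD/slug
        DIR=$(echo "$OLD" | cut -f4-7 -d'/')
        # -e follows the symlink, so it is false for a dangling link
        if [ ! -e "$DIR/index.html" ]; then
            echo "BROKEN: $DIR/index.html"
            bad=1
        fi
    done < "$map"
    return "$bad"
}
```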

I upload the new files and directories to S3, and the old URLs now work. However, I want to encourage users to switch to the new links, so I update robots.txt to exclude the old-style URLs. Search engines that honor robots.txt, therefore, will not crawl the old Wordpress-style links.

```text
User-agent: *
Disallow: /2016/
Disallow: /2017/

Sitemap: https://www.freshlex.com/freshlex_sitemap.xml
```
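Crawlers treat each `Disallow` value as a path prefix: any URL path that starts with `/2016/` or `/2017/` is off limits, while the new flat pages such as `/pass-bootstrap-html-attributes-to-flask-wtforms.html` stay crawlable. The hypothetical `is_disallowed` function is a deliberately simplified sketch of that matching rule; it ignores `User-agent` groups, `Allow` lines and wildcards, which major crawlers also support.

```bash
# Simplified sketch of robots.txt prefix matching (assumption: only plain
# "Disallow:" prefixes are handled; User-agent groups, Allow lines and
# wildcards are ignored). Returns 0 if the path is disallowed, 1 otherwise.
is_disallowed() {
    local path="$1" robots="${2:-robots.txt}" key value
    while IFS=':' read -r key value; do
        value="${value# }"             # trim the space after the colon
        if [ "$key" = "Disallow" ] && [ -n "$value" ]; then
            case "$path" in
                "$value"*) return 0 ;;  # path starts with the Disallow prefix
            esac
        fi
    done < "$robots"
    return 1
}
```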

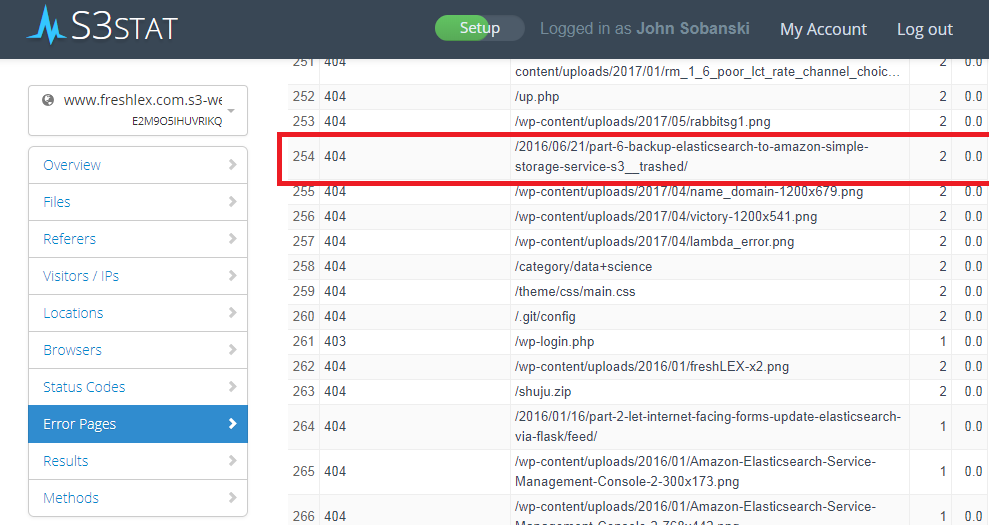

I use s3stat to sanity-check the error pages and notice that one of the errors lists a weird URL.

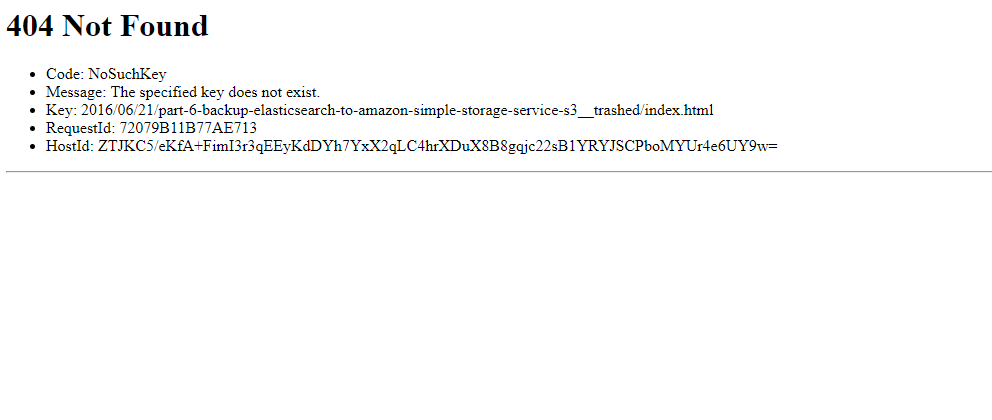

I attempt to click a stale link (one that follows the Wordpress approach) and, of course, get a hideous error.

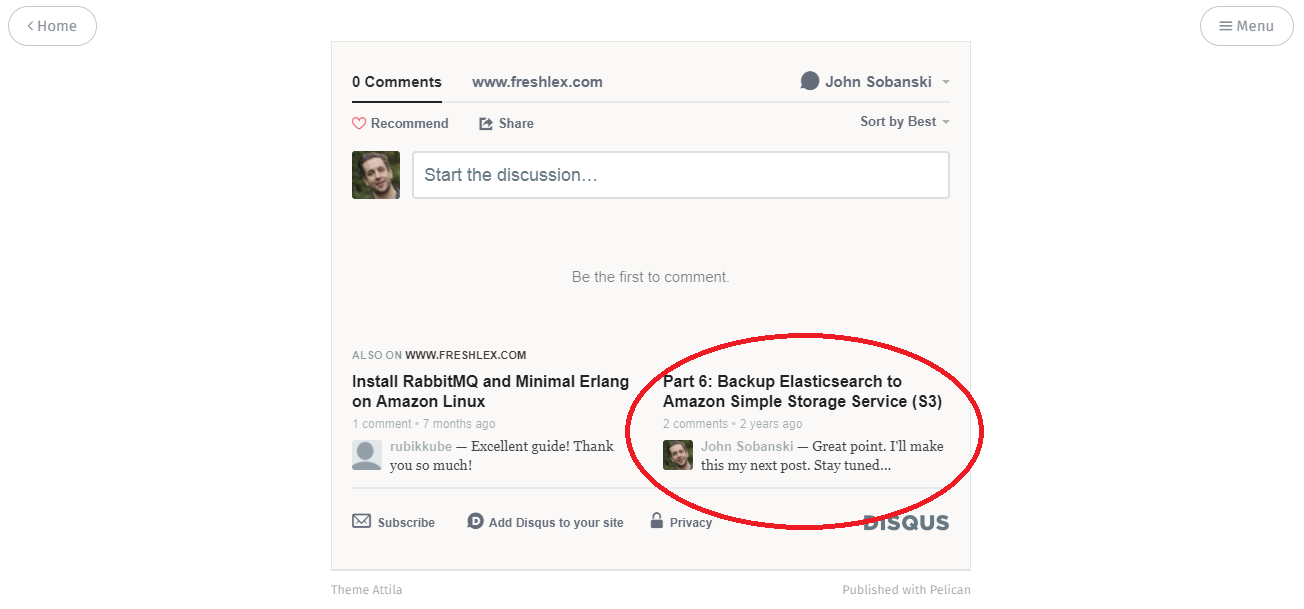

After some investigation, I notice that I did not include this weird URL in my URL map. It turns out that a user who visits my site via the new links sees an 'also on Freshlex' box from Disqus that points to the old URL.

Thanks to s3stat, I identified the root cause of the issue. I quickly go back to Disqus and add the weird URL to the migration tool.

After the migration tool works its magic, the 'also on' box now points to the correct, new URL.

Conclusion

Thanks again to s3stat for providing an excellent product, and for hooking me up with a free lifetime subscription through the cheap bastard plan!