NOTE: An updated version of this blog post, which uses Boto3 and Elasticsearch 7.X, is available.

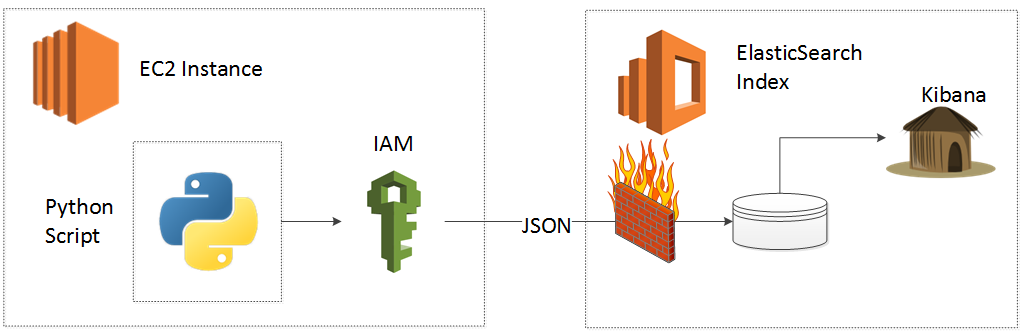

Step one of our journey connects EC2 to ES using the Amazon boto Python library. I spent more than a few hours poring over the AWS help docs and pounding at my keyboard on my instances to figure out the easiest and most direct method to accomplish this. As of this writing, I have found no decent HOWTO online on connecting EC2 to the AWS-provided ES, so I put my chin up and started swinging. If this article helps you get your job done quickly, please write a comment below (even if it's just "thanks dude!").

One more caveat before we begin. You need to trust me that the IAM role approach below will make your life easy. It's a quick, "pull off the bandaid" method that will save you a ton of headaches and troubleshooting. Unfortunately, the IAM role method has two downsides: (1) it appears boring and complicated, so your mind comes up with reasons to skip it in order to comfort your precious ego (no offense, my mind pulls the same crap and I am not nearly as smart as you), and (2) it uses JSON, which to the uninitiated also appears boring and complicated. All I ask of you is twenty seconds of courage: read my IAM instructions (which I spent hours simplifying), copy and paste, and move on! Your alternatives, (1) copying and pasting your AWS credentials or (2) using the old "Allow by IP" hack, appear much simpler, but beyond the most basic integrations they become maddeningly difficult and time-wasting, if not impossible.

In this blog post you will:

1. Deploy an AWS Elasticsearch Instance

2. Create an IAM Role, Policy and Trust Relationship

3. Connect an EC2 Instance

Note: This blog post assumes you know how to SSH into an EC2 instance.

1. Deploy an AWS Elasticsearch Instance

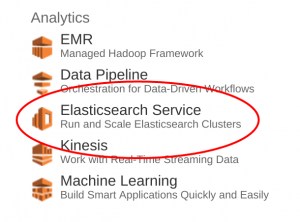

Amazon makes Elasticsearch deployment a snap. Just click the Elasticsearch Service icon on your management screen:

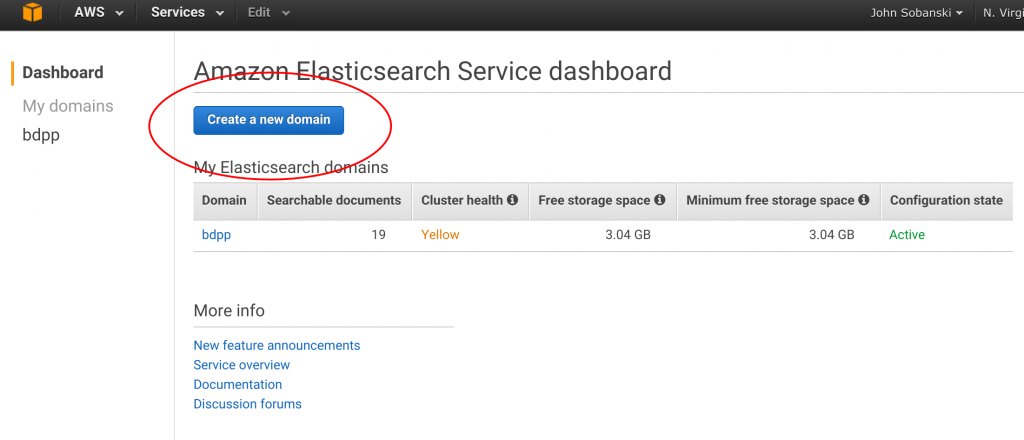

Then click "New Domain."

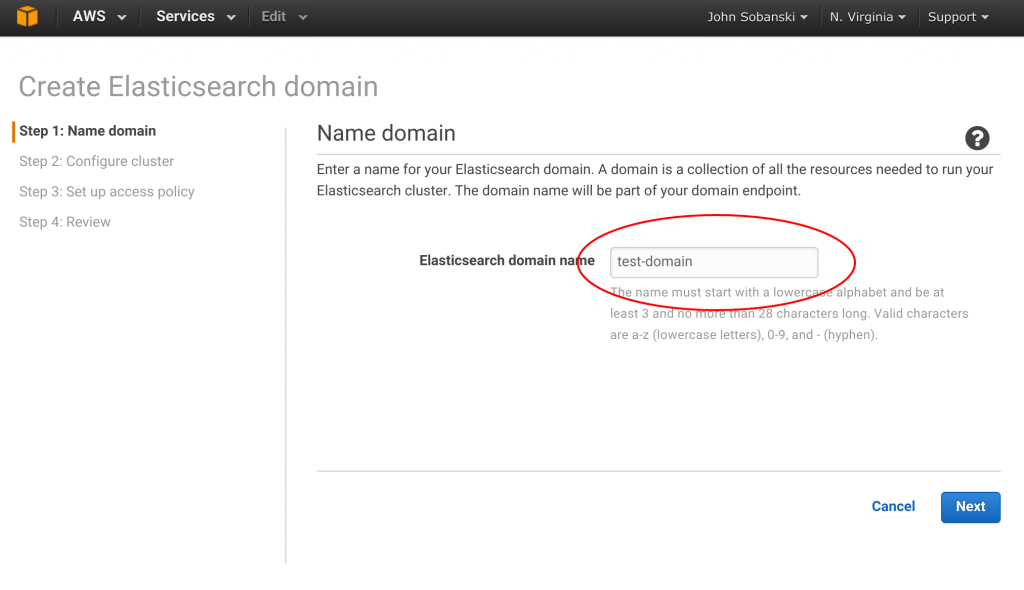

Name your domain "test-domain" (or whatever you like).
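If you ever script domain creation, it can help to check a name before submitting it. The sketch below assumes the naming rules AWS documents for Amazon ES domains (a lowercase letter first, 3 to 28 characters in total, only lowercase letters, digits and hyphens); `is_valid_domain_name` is a hypothetical helper, and the current AWS documentation remains the authority on the rules.

```python
import re

# Assumed Amazon ES naming rules (verify against current AWS docs):
# a lowercase letter first, 3-28 characters, only a-z, 0-9 and hyphens.
DOMAIN_NAME_RE = re.compile(r"[a-z][a-z0-9-]{2,27}")


def is_valid_domain_name(name):
    """Return True if name satisfies the assumed domain naming rules."""
    # fullmatch requires the whole string to match, not just a prefix.
    return DOMAIN_NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["test-domain", "Test-Domain", "td", "9lives"]:
        print(candidate, is_valid_domain_name(candidate))
```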

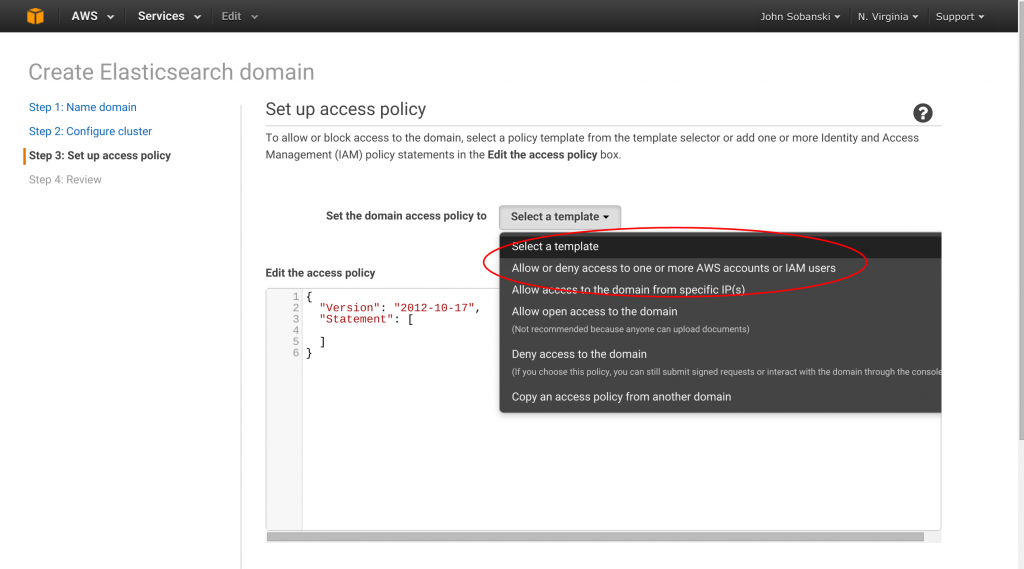

Keep the defaults on the next screen, "Step 2: Configure Cluster," and just click "Next." On the following screen, select "Allow or deny access to one or more AWS accounts or IAM users." (Resist the temptation to pick "Allow by IP.")

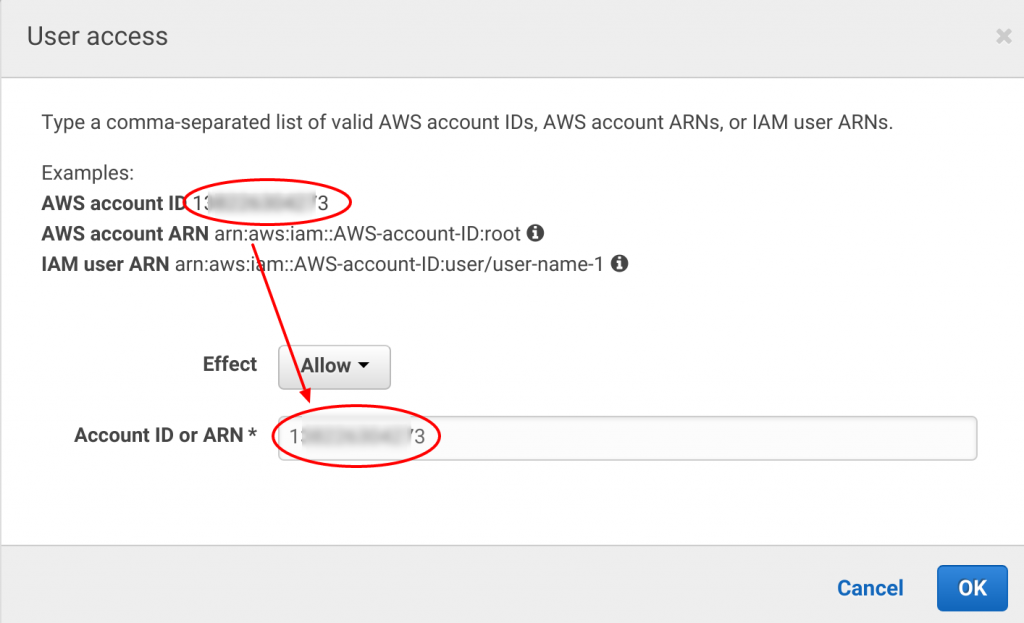

Amazon makes security easy as well. On the next menu, AWS lists your ARN. Just copy and paste it into the text field and hit "Next."

AWS generates the JSON for your Elasticsearch service:

Click "Next" and then "confirm and create."
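For reference, an access policy for this option generally has the shape built below: one Allow statement whose principal is the ARN you pasted and whose resource covers every path in the domain. This is a hedged sketch, not the console's exact output. It assumes you pasted your account's root ARN; the account ID `123456789012`, the region and the domain name are placeholders, and `domain_access_policy` is a hypothetical helper. Compare the result against what the console actually generated for you.

```python
import json

# Hypothetical placeholders: substitute your own account ID, region and domain.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
DOMAIN = "test-domain"


def domain_access_policy(account_id, region, domain):
    """Build a policy granting one AWS account full access to an ES domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumes the ARN pasted into the console is the account root ARN.
                "Principal": {"AWS": "arn:aws:iam::%s:root" % account_id},
                "Action": "es:*",
                # The trailing /* covers every path (indices, documents) in the domain.
                "Resource": "arn:aws:es:%s:%s:domain/%s/*" % (region, account_id, domain),
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(domain_access_policy(ACCOUNT_ID, REGION, DOMAIN), indent=2))
```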

2. Create an IAM Role, Policy and Trust Relationship

2.1 Create an IAM Role

OK, deep breath. Just follow me and you will confront your IAM fears.

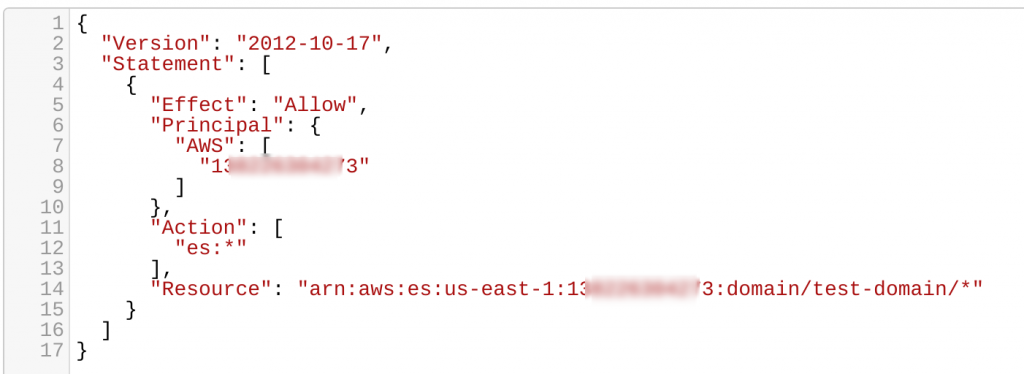

First, select "Identity and Access Management" from the AWS Management Console.

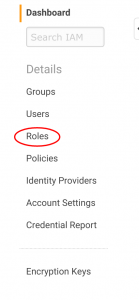

On the Dashboard, click "roles."

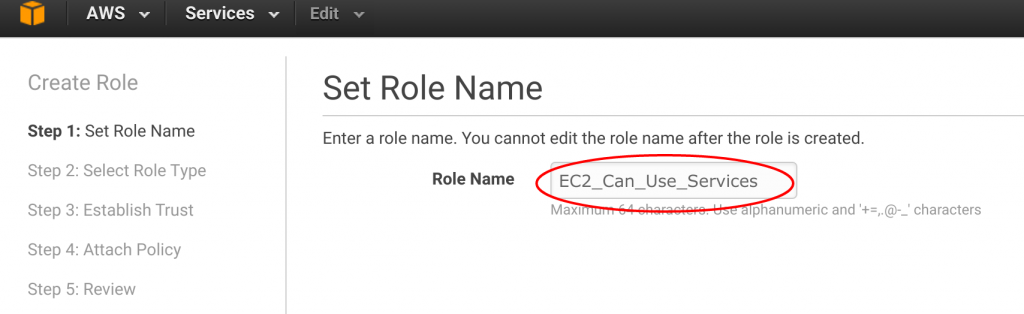

Next, click "create new role." I like to name the roles something obvious. Since we want to grant an EC2 instance (i.e. a Linux server) access to Amazon services, I picked the name "EC2_Can_Use_Services."

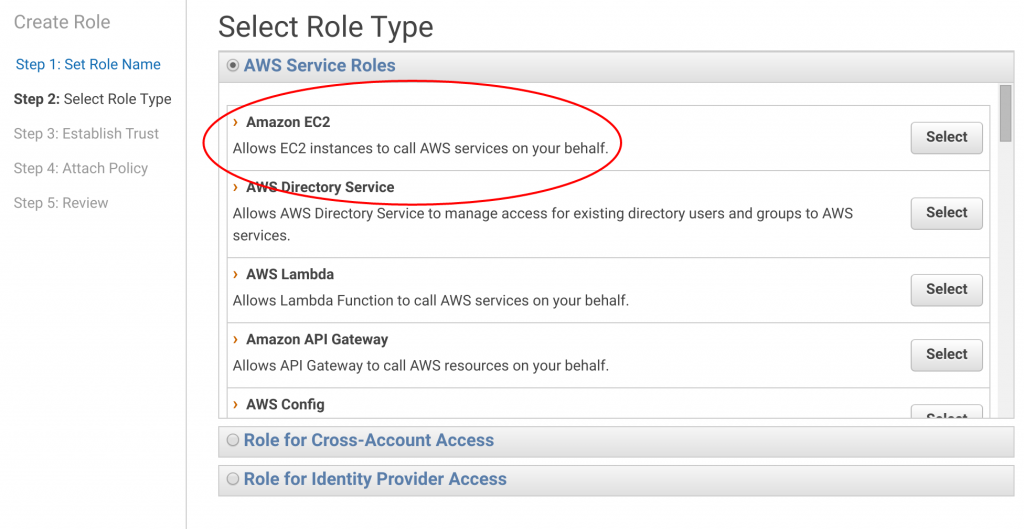

On the next screen, Amazon asks you to select from popular roles. Click on the first one "Allow EC2 instances to call AWS services on your behalf."

You're almost done! Just click through the next menu, "Attach Policy"; we will worry about that in the next section.

Click "Next Step" and then "Create Role."

2.2 Create an IAM Policy

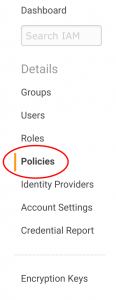

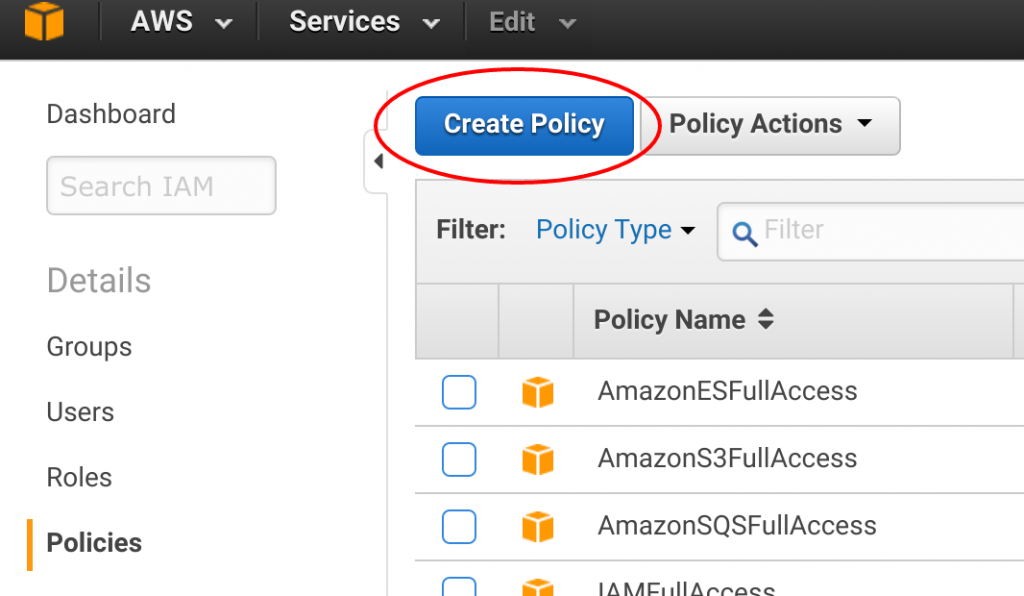

Back at the IAM Management dashboard, select "Policies."

Then, select "create policy" and then "create your own policy."

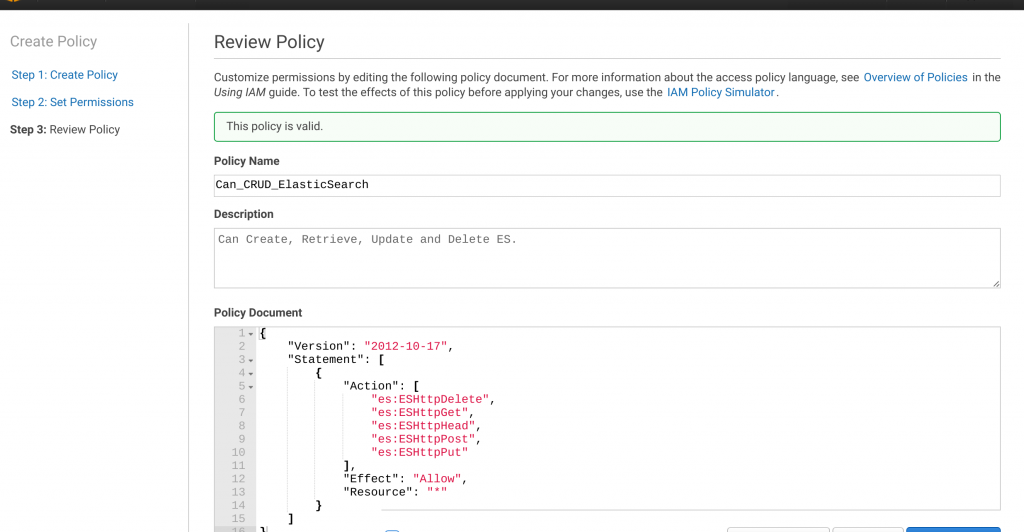

Name your policy "Can_CRUD_Elasticsearch," add a description and then copy and paste the following JSON into the "Policy Document:"

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "es:ESHttpDelete",
        "es:ESHttpGet",
        "es:ESHttpHead",
        "es:ESHttpPost",
        "es:ESHttpPut"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
```

Click "Validate Policy." If you see "the policy is valid," click "Create Policy."
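To see what this policy does and does not grant, the sketch below runs a deliberately simplified version of IAM's action matching against it. It only compares action names (case-insensitively, with `*` wildcards) and ignores Deny statements, resources and conditions, so treat it as an illustration rather than a policy evaluator; `allows` is a hypothetical helper. The five `es:ESHttp*` actions cover HTTP requests against the domain endpoint, while domain management actions such as `es:DeleteElasticsearchDomain` are not granted.

```python
import fnmatch
import json

# The policy document from step 2.2, pasted verbatim.
POLICY = """
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "es:ESHttpDelete",
        "es:ESHttpGet",
        "es:ESHttpHead",
        "es:ESHttpPost",
        "es:ESHttpPut"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
"""


def allows(policy, action):
    """Simplified check: does any Allow statement list a pattern matching action?

    Ignores Deny, Resource, Condition, NotAction and everything else real IAM
    evaluation considers. Note fnmatch also treats [...] as a character class,
    which IAM does not.
    """
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):  # IAM accepts a single string or a list
            actions = [actions]
        # IAM action names are case-insensitive; "*" acts as a wildcard.
        if any(fnmatch.fnmatchcase(action.lower(), a.lower()) for a in actions):
            return True
    return False


if __name__ == "__main__":
    policy = json.loads(POLICY)
    for action in ["es:ESHttpGet", "es:ESHttpPut", "es:DeleteElasticsearchDomain"]:
        print(action, allows(policy, action))
```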

2.3 Attach your Policy

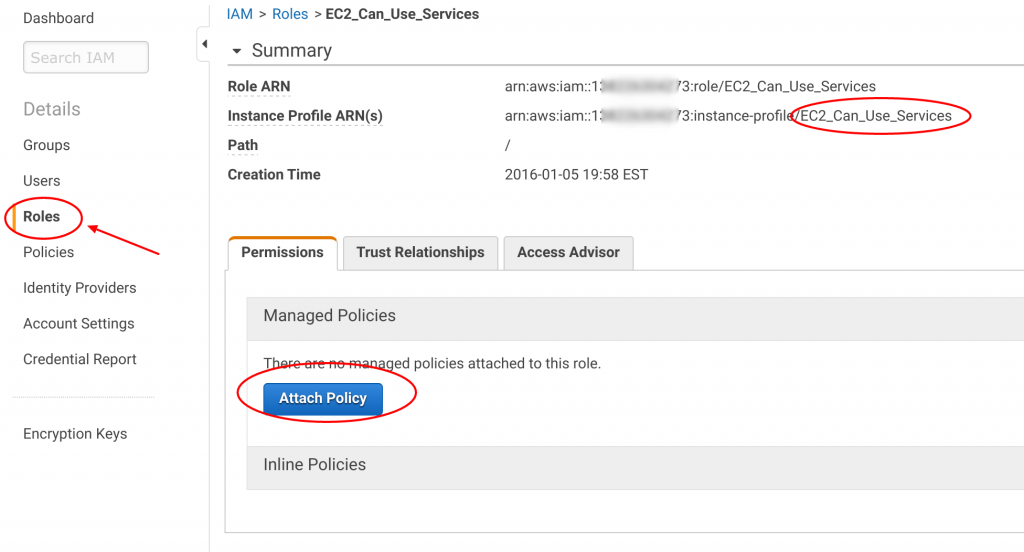

Go back to the Role you created, "EC2_Can_Use_Services," and click "attach policy."

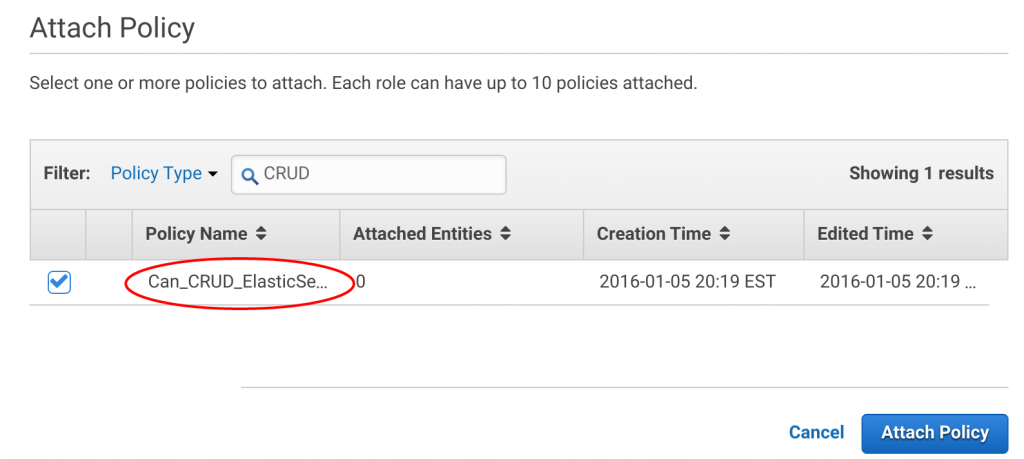

Now, in the search bar, type "CRUD" and you will see the Policy you just created, "Can_CRUD_Elasticsearch." Click this policy and then click "Attach Policy."

2.4 Trust Elasticsearch

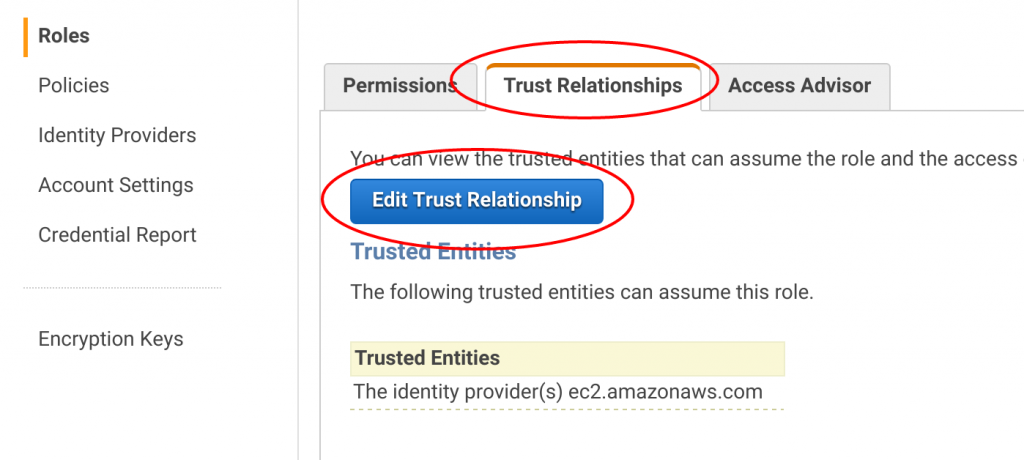

Now it's time for your victory lap! After you click "attach policy," AWS takes you back to the IAM Role dashboard. From here, click the "Trust Relationship" tab and click "Edit Trust Relationships."

Now edit the JSON to reflect the JSON below. You can copy and paste the JSON into the "Policy Document" field.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": [
          "ec2.amazonaws.com",
          "es.amazonaws.com"
        ]
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
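A trust policy should list several services as a JSON array rather than by repeating the "Service" key. Many JSON parsers, Python's included, silently keep only the last duplicate key, which would drop `ec2.amazonaws.com` and leave EC2 unable to assume the role; IAM's own handling of duplicate keys is not something to rely on. The sketch below demonstrates the difference with Python's `json` module; `trusted_services` is a hypothetical helper.

```python
import json

# Repeating a key inside one JSON object: Python's json module keeps the last value.
duplicated = '{"Principal": {"Service": "ec2.amazonaws.com", "Service": "es.amazonaws.com"}}'

# Listing both services in an array keeps them both.
as_list = '{"Principal": {"Service": ["ec2.amazonaws.com", "es.amazonaws.com"]}}'


def trusted_services(doc):
    """Return the service principals a trust-policy fragment names, as a list."""
    service = json.loads(doc)["Principal"]["Service"]
    return service if isinstance(service, list) else [service]


if __name__ == "__main__":
    print(trusted_services(duplicated))  # ['es.amazonaws.com']: EC2 silently disappeared
    print(trusted_services(as_list))     # ['ec2.amazonaws.com', 'es.amazonaws.com']
```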

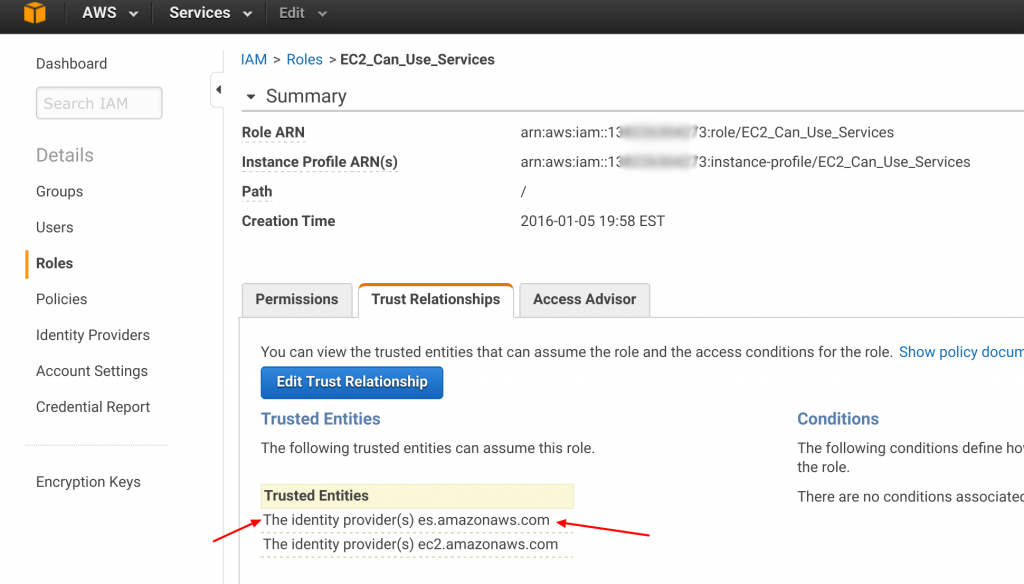

After you click "update trust relationship," your IAM Role Dashboard will read as follows:

Congrats! You made it through the hardest part!!!

3. Connect an EC2 instance

3.1 Launch an EC2 instance with the IAM role

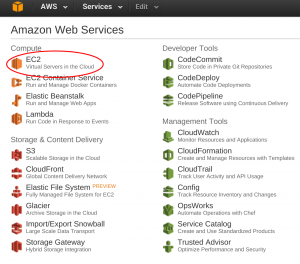

At the time of writing, you could only attach an IAM role when creating an instance (AWS has since added the ability to attach a role to a running instance), so we will launch a brand new EC2 instance (no biggie). From the AWS Management Console, click "EC2" and then "Launch Instance."

In "Step 1: Choose an AMI Instance," select "Ubuntu Server 14.04 LTS (HVM), SSD Volume Type."

In "Step 2: Choose an Instance Type" select t2.micro.

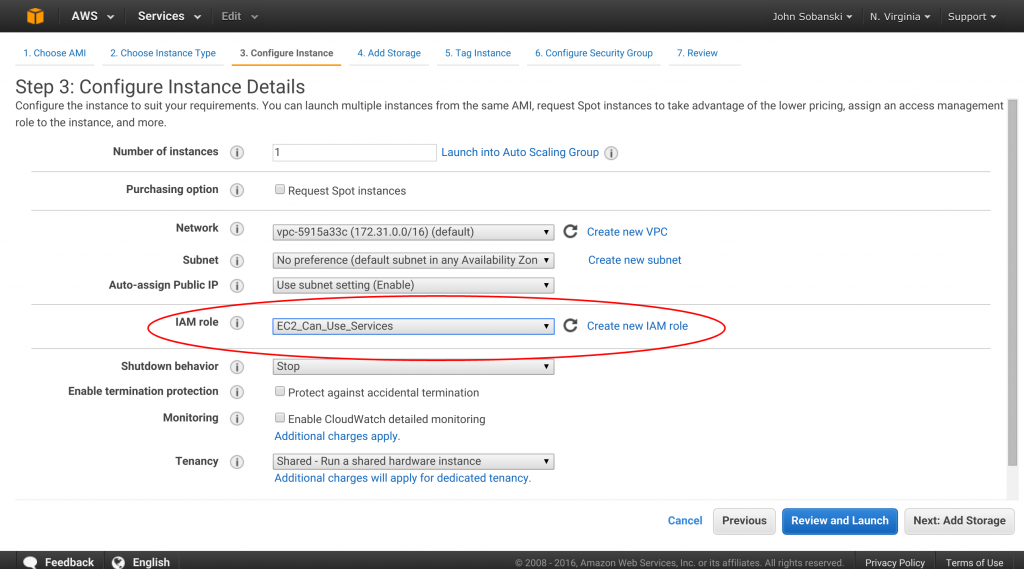

Now, this part is very important: in Step 3, be sure to pick your IAM role.

Now click "Review and Launch" and then "Launch." AWS will take a few minutes to launch the instance.
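Once you have SSHed into the new instance, you can confirm from inside it that the role actually got attached. This sketch (Python 3, standard library only) asks the EC2 instance metadata service for the role name using the IMDSv2 token flow; `attached_role_name` is a hypothetical helper, and anywhere other than EC2 it simply returns `None`.

```python
import urllib.request

# EC2 instance metadata service (IMDS); only reachable from inside an instance.
IMDS = "http://169.254.169.254/latest"


def attached_role_name(timeout=1.0):
    """Return the IAM role name attached to this EC2 instance, or None.

    Uses the IMDSv2 flow: fetch a session token with PUT, then send it on the GET.
    Returns None off EC2, when no role is attached, or on any network error.
    """
    try:
        token_req = urllib.request.Request(
            IMDS + "/api/token",
            method="PUT",
            headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
        )
        with urllib.request.urlopen(token_req, timeout=timeout) as resp:
            token = resp.read().decode()
        role_req = urllib.request.Request(
            IMDS + "/meta-data/iam/security-credentials/",
            headers={"X-aws-ec2-metadata-token": token},
        )
        with urllib.request.urlopen(role_req, timeout=timeout) as resp:
            # The listing holds one role name per line; an instance has at most one.
            names = resp.read().decode().split()
        return names[0] if names else None
    except OSError:  # URLError, HTTPError and socket timeouts all derive from OSError
        return None


if __name__ == "__main__":
    print(attached_role_name() or "No IAM role found (or not running on EC2)")
```

On an instance launched with the role from section 2, this should print "EC2_Can_Use_Services".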

3.2 Configure the instance

First up, add your hostname to /etc/hosts to get rid of sudo's "unable to resolve host" warning:

```
ubuntu@ip-172-31-35-80:~$ sudo vim /etc/hosts
```
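If you would rather not hunt through the file by hand, the helper below computes the line to add. It assumes the Debian/Ubuntu convention of mapping the machine's hostname to `127.0.1.1`; `add_hostname_entry` is a hypothetical helper that only prints the proposed file, leaving the actual write (with sudo) to you.

```python
import socket


def add_hostname_entry(hosts_text, hostname):
    """Return hosts_text with a 127.0.1.1 line for hostname, if none exists yet.

    A pure-text helper: it does not write /etc/hosts itself.
    """
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if hostname in fields[1:]:  # fields[0] is the IP address
            return hosts_text
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return hosts_text + "127.0.1.1 %s\n" % hostname


if __name__ == "__main__":
    with open("/etc/hosts") as f:
        current = f.read()
    # Print the proposed file; write it back with sudo only if it looks right.
    print(add_hostname_entry(current, socket.gethostname()))
```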

Then, update your server.

```
ubuntu@ip-172-31-35-80:~$ sudo apt-get -y update
ubuntu@ip-172-31-35-80:~$ sudo apt-get -y dist-upgrade
```

Now install Python VirtualEnv. VirtualEnv lets you make sense of any Python button mashing. You point VirtualEnv at a directory, run "activate," and then install packages to your heart's content without screwing with the rest of the server. Once you're done, you type "deactivate" and you're back to a clean distro. You can always pick up where you left off with the "activate" command.

```
ubuntu@ip-172-31-35-80:~$ sudo apt-get install -y python-virtualenv
```

You can activate an environment with a "." or "source" (or El Duderino if you're not into the whole brevity thing).

```
ubuntu@ip-172-31-35-80:~$ mkdir connect_to_es
ubuntu@ip-172-31-35-80:~$ virtualenv connect_to_es/
New python executable in connect_to_es/bin/python
Installing setuptools, pip...done.
ubuntu@ip-172-31-35-80:~$ . connect_to_es/bin/activate
(connect_to_es)ubuntu@ip-172-31-35-80:~$ cd connect_to_es/
(connect_to_es)ubuntu@ip-172-31-35-80:~/connect_to_es$
```

Notice that the shell prompt lists the name of your virtual environment (connect_to_es). Since you're in a virtual sandbox, you can pip install (or easy_install) to your heart's content.
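Besides the prompt, Python itself can tell you whether it is running inside an environment. This sketch relies on how the tools mark an environment: legacy virtualenv (before 20.0, like the one installed above) sets `sys.real_prefix`, while `venv` and newer virtualenv releases make `sys.prefix` differ from `sys.base_prefix`. `in_virtualenv` is a hypothetical helper.

```python
import sys


def in_virtualenv():
    """Return True when this interpreter is running inside a virtual environment."""
    # Legacy virtualenv (before 20.0) records the original install in sys.real_prefix.
    if hasattr(sys, "real_prefix"):
        return True
    # venv and modern virtualenv keep base_prefix pointing at the base install
    # while prefix points at the environment directory.
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


if __name__ == "__main__":
    print("virtualenv active:", in_virtualenv())
    print("packages install under:", sys.prefix)
```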

```
(connect_to_es)ubuntu@ip-172-31-35-80:~/connect_to_es$ easy_install boto
```

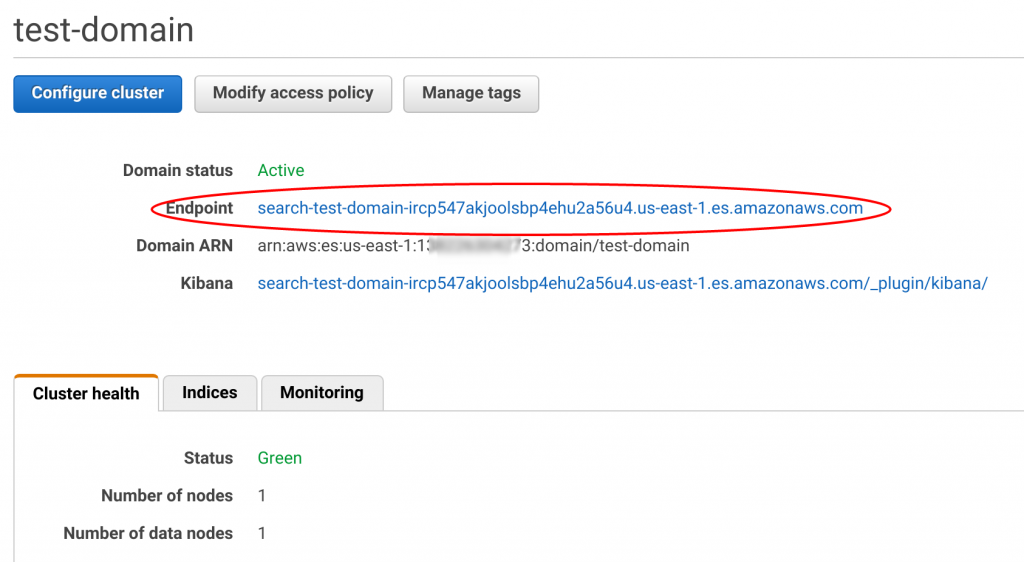

Go back to the AWS Management console, click the Elasticsearch service and copy the address for your endpoint.
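The endpoint also encodes the region, and the script's `region` argument has to match it, because Signature Version 4 signs each request for a specific region. This sketch assumes the `search-<domain>-<id>.<region>.es.amazonaws.com` layout of the endpoint used in this post; `split_endpoint` is a hypothetical helper that strips a copied `https://` prefix and raises `ValueError` on anything else.

```python
from urllib.parse import urlparse


def split_endpoint(endpoint):
    """Return (host, region) from an Amazon ES endpoint copied from the console.

    Assumes the search-<domain>-<id>.<region>.es.amazonaws.com layout;
    raises ValueError for anything else.
    """
    # The console may show the endpoint with a scheme; keep only the host part.
    if "://" in endpoint:
        host = urlparse(endpoint).hostname or ""
    else:
        host = endpoint.strip().strip("/")
    labels = host.split(".")
    if len(labels) != 5 or labels[2:] != ["es", "amazonaws", "com"]:
        raise ValueError("unexpected endpoint format: %r" % endpoint)
    return host, labels[1]


if __name__ == "__main__":
    print(split_endpoint(
        "search-test-domain-ircp547akjoolsbp4ehu2a56u4.us-east-1.es.amazonaws.com"))
```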

Now, edit the following Python script, replacing the value of "host" with your endpoint address (and "region," if your domain is not in us-east-1).

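```python
# connect_test.py
from boto.connection import AWSAuthConnection


class ESConnection(AWSAuthConnection):
    """A boto connection that signs requests for the Amazon Elasticsearch Service."""

    def __init__(self, region, **kwargs):
        super(ESConnection, self).__init__(**kwargs)
        # SigV4 signatures are scoped to a region and a service name ("es").
        self._set_auth_region_name(region)
        self._set_auth_service_name("es")

    def _required_auth_capability(self):
        # Ask boto to sign every request with AWS Signature Version 4.
        return ['hmac-v4']


if __name__ == "__main__":
    # No access keys are passed in: boto falls back to the temporary credentials
    # of the IAM role attached to this instance (via the instance metadata).
    client = ESConnection(
        region='us-east-1',
        # Be sure to put the URL for your Elasticsearch endpoint below!
        host='search-test-domain-ircp547akjoolsbp4ehu2a56u4.us-east-1.es.amazonaws.com',
        # False sends plain HTTP; set True to talk to the endpoint over HTTPS.
        is_secure=False)

    resp = client.make_request(method='GET', path='/')
    print(resp.read().decode('utf-8'))
```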

Then, from inside your virtual environment, execute the script.

```
(connect_to_es)ubuntu@ip-172-31-35-80:~/connect_to_es$ python connect_test.py
```

And you will get your result...

```json
{
  "status" : 200,
  "name" : "Chemistro",
  "cluster_name" : "XXXXXXXXXXXX:test-domain",
  "version" : {
    "number" : "1.5.2",
    "build_hash" : "62ff9868b4c8a0c45860bebb259e21980778ab1c",
    "build_timestamp" : "2015-04-27T09:21:06Z",
    "build_snapshot" : false,
    "lucene_version" : "4.10.4"
  },
  "tagline" : "You Know, for Search"
}
```
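If you want the script to check the answer rather than just print it, a minimal sketch might parse the body with the standard `json` module. `summarize` is a hypothetical helper and `SAMPLE` is an abridged copy of the output above; in `connect_test.py` you would pass it `resp.read()` instead (on Python 3.6+ `json.loads` accepts bytes directly). It treats a missing `"status"` field as success, since not every Elasticsearch version includes one.

```python
import json

# Abridged copy of the response body shown above.
SAMPLE = """
{
  "status" : 200,
  "name" : "Chemistro",
  "cluster_name" : "XXXXXXXXXXXX:test-domain",
  "version" : {
    "number" : "1.5.2",
    "lucene_version" : "4.10.4"
  },
  "tagline" : "You Know, for Search"
}
"""


def summarize(body):
    """Return (domain_name, es_version) from the JSON served at the endpoint root."""
    info = json.loads(body)
    if info.get("status", 200) != 200:
        raise RuntimeError("unexpected status: %r" % info.get("status"))
    # As in the output above, the cluster is reported as "<account id>:<domain name>".
    domain = info["cluster_name"].split(":", 1)[-1]
    return domain, info["version"]["number"]


if __name__ == "__main__":
    print(summarize(SAMPLE))
```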

Think about what you just did. You connected to an AWS-provided Elasticsearch service without any need to copy and paste your AWS_ACCESS_KEY or AWS_SECRET_KEY. You did not need to figure out the public IP address of your EC2 instance in order to update the security policy of the Elasticsearch service. Given these two points, you can easily spin up spot instances and have them connect to Elasticsearch by simply (1) ensuring they have the same ARN (by default they will) and (2) ensuring you point to the proper IAM role at creation time. This process will come in handy when you connect Elastic Beanstalk to the Elasticsearch service, which we will do in part 2 of this HOWTO!
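Under the hood, boto still signs every request: the role supplies temporary credentials, and the `hmac-v4` capability in `connect_test.py` tells boto to use AWS Signature Version 4, which scopes each signature to a date, a region and a service (`es`). The sketch below shows only the signing-key derivation step of that scheme, using `hmac` and `hashlib`; building the canonical request and string to sign, and sending the role's session token, are left to boto. The secret key is the placeholder AWS uses in its own documentation examples, and `sigv4_signing_key` is a hypothetical helper.

```python
import hashlib
import hmac


def _hmac(key, msg):
    """HMAC-SHA256 of msg (a str) under key (bytes), returned as raw bytes."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key, date_stamp, region, service):
    """Derive the Signature Version 4 signing key.

    date_stamp is YYYYMMDD; for Amazon ES the service name is "es", which is
    why connect_test.py calls _set_auth_service_name("es").
    """
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


if __name__ == "__main__":
    # Placeholder secret from AWS documentation examples; never hard-code real keys.
    key = sigv4_signing_key(
        "wJalrXUtnFEMI/K7MDENG+bPxRfiCYEXAMPLEKEY", "20150601", "us-east-1", "es")
    print(key.hex())
```

Because the region and service feed into the key, a `region` in `ESConnection` that does not match the endpoint yields a signature the service rejects.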